Using metrics in tensorflow.keras


For a version of this code that does not use metrics, see this post:

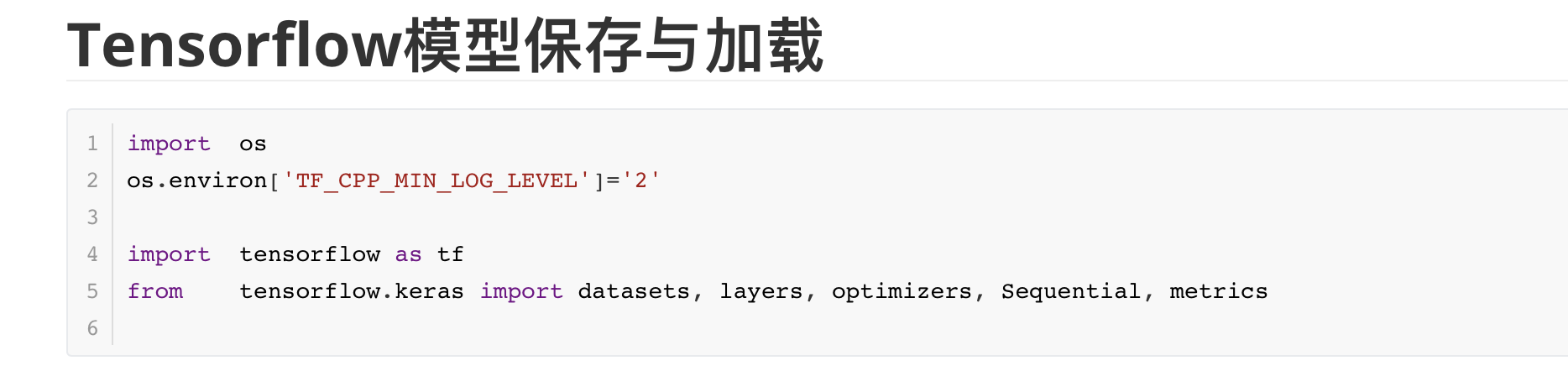

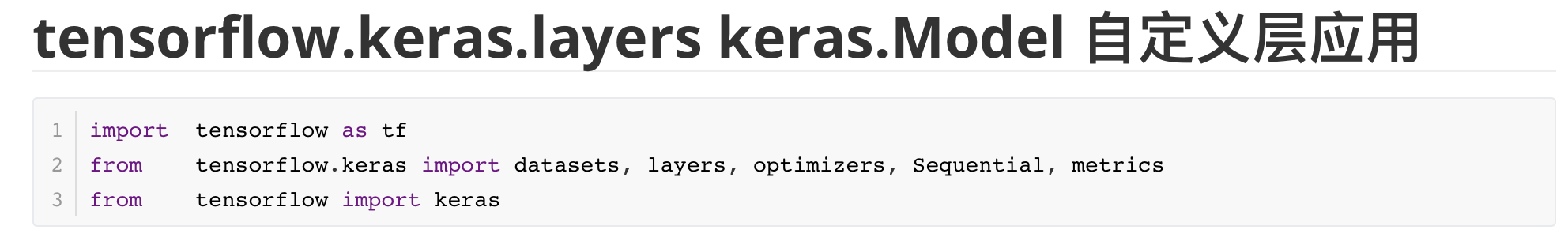

```python
import tensorflow as tf
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics
```

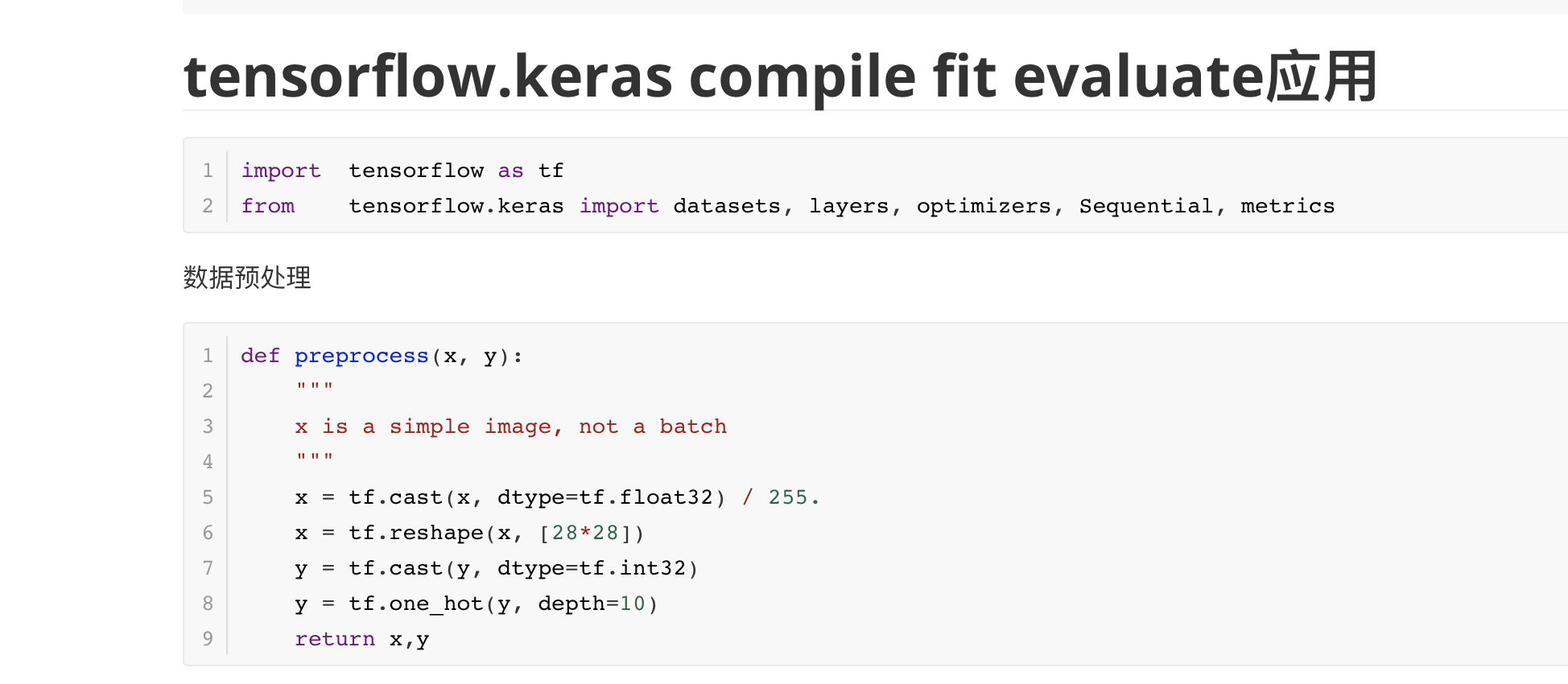

Convert the data types and normalize

```python
def preprocess(x, y):
    ...
```
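Only the signature of `preprocess` survives above. Going by the heading, a minimal sketch might cast the images to `float32` scaled into [0, 1] and the labels to `int32`; the exact dtypes are an assumption, not taken from the post:

```python
import tensorflow as tf

def preprocess(x, y):
    # Pixels: uint8 values in [0, 255] -> float32 values in [0, 1]
    x = tf.cast(x, dtype=tf.float32) / 255.
    # Labels: int32, so they can later be compared with argmax predictions
    y = tf.cast(y, dtype=tf.int32)
    return x, y
```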

Load the dataset and preprocess it

```python
batchsz = 128
...
```

```
datasets: (60000, 28, 28) (60000,) 0 255
```
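The printed shapes (60,000 images of 28×28, pixel range 0 to 255) match MNIST's training split, so the sketch below assumes MNIST and a `tf.data` pipeline. `make_datasets` and its `epochs` parameter are hypothetical names; the shuffle buffer and the use of `repeat` (so that the step counter in the log can run past one epoch) are assumptions about how the post builds its data:

```python
import tensorflow as tf

def preprocess(x, y):
    x = tf.cast(x, dtype=tf.float32) / 255.
    y = tf.cast(y, dtype=tf.int32)
    return x, y

def make_datasets(x, y, x_val, y_val, batchsz=128, epochs=10):
    # Training pipeline: normalize, shuffle, batch, then repeat for several epochs
    db = tf.data.Dataset.from_tensor_slices((x, y))
    db = db.map(preprocess).shuffle(60000).batch(batchsz).repeat(epochs)
    # Validation pipeline: normalize and batch only; order does not matter here
    ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
    ds_val = ds_val.map(preprocess).batch(batchsz)
    return db, ds_val

# With the real data (downloads MNIST on first use):
# (x, y), (x_val, y_val) = tf.keras.datasets.mnist.load_data()
# print('datasets:', x.shape, y.shape, x.min(), x.max())
# db, ds_val = make_datasets(x, y, x_val, y_val, batchsz=128)
```

Batching before `repeat` keeps every epoch's final partial batch separate instead of mixing samples from two epochs into one batch.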

Build the model

```python
network = Sequential([layers.Dense(256, activation='relu'),
                      ...])
```
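Only the first layer is visible in the code line above, but the parameter counts in the model summary pin the widths down as 256, 128, 64, 32 and 10 units on a flattened 784-pixel input. A minimal sketch under the assumptions that every hidden layer uses ReLU and the output layer returns raw logits (neither is confirmed by the post); `build_network` is a hypothetical helper:

```python
import tensorflow as tf

def build_network():
    # Widths follow from the summary's parameter counts; activations are assumed
    network = tf.keras.Sequential([
        tf.keras.layers.Dense(256, activation='relu'),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(64, activation='relu'),
        tf.keras.layers.Dense(32, activation='relu'),
        tf.keras.layers.Dense(10),  # logits for the 10 digit classes
    ])
    # Build for batches of flattened 28x28 images so summary() can report shapes
    network.build(input_shape=(None, 28 * 28))
    return network
```

Calling `build_network().summary()` should report the same 244,522 trainable parameters as the summary that follows.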

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                multiple                  200960
_________________________________________________________________
dense_1 (Dense)              multiple                  32896
_________________________________________________________________
dense_2 (Dense)              multiple                  8256
_________________________________________________________________
dense_3 (Dense)              multiple                  2080
_________________________________________________________________
dense_4 (Dense)              multiple                  330
=================================================================
Total params: 244,522
Trainable params: 244,522
Non-trainable params: 0
_________________________________________________________________
```
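Each `Param #` entry is the size of a Dense layer's weight matrix plus its bias vector, i.e. `n_in * n_out + n_out`. Assuming 784 flattened input pixels, the summary's numbers can be reproduced in plain Python (`dense_params` is a hypothetical helper):

```python
def dense_params(n_in, n_out):
    # Weight matrix of shape (n_in, n_out) plus one bias per output unit
    return n_in * n_out + n_out

widths = [28 * 28, 256, 128, 64, 32, 10]
per_layer = [dense_params(a, b) for a, b in zip(widths, widths[1:])]
print(per_layer)       # [200960, 32896, 8256, 2080, 330]
print(sum(per_layer))  # 244522
```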

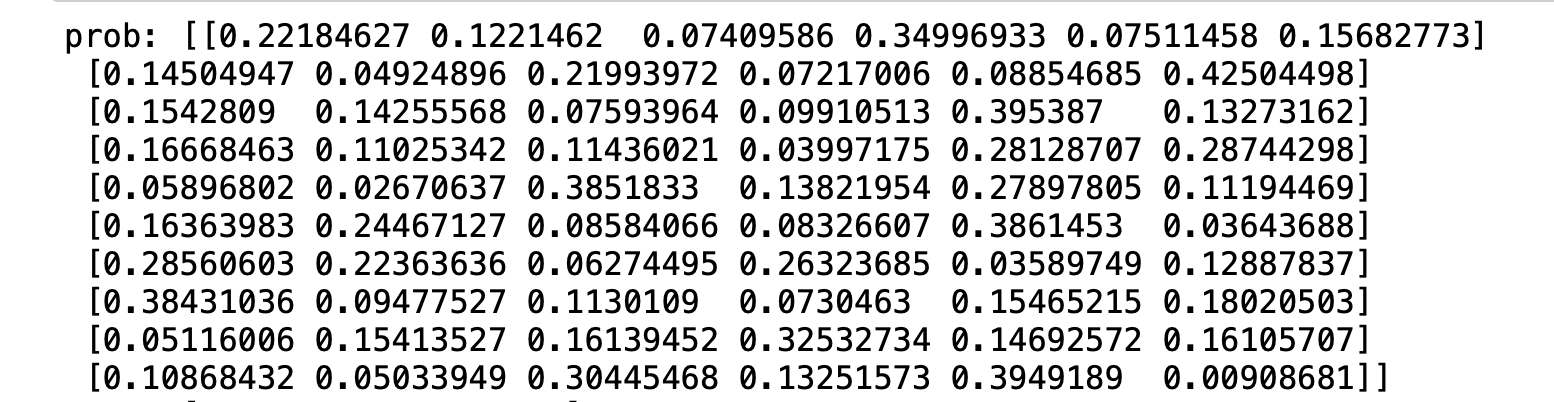

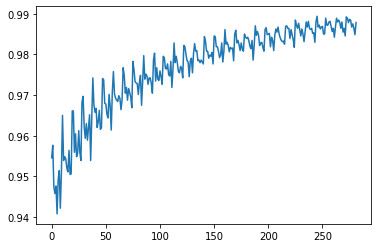

Train the model and apply metrics

```python
# Use metrics to compute the accuracy and the mean of the loss
...
```
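The training code is truncated above. The sketch below shows one way to combine `tf.keras.metrics.Mean` (a running mean of the batch losses, reset after each log line) with `tf.keras.metrics.Accuracy` (exact matches between labels and argmax predictions on the validation set), printing a manual count next to the metric for comparison. `train_with_metrics`, `log_every` and `eval_every` are hypothetical names. The loss uses `tf.nn.sparse_softmax_cross_entropy_with_logits` on integer labels, which is my choice rather than necessarily the post's. `reset_state()` is the method name in recent TensorFlow releases; older code calls `reset_states()`. The log that follows was produced by the post's own script, not by this sketch.

```python
import tensorflow as tf

def train_with_metrics(network, db, ds_val, optimizer, log_every=100, eval_every=500):
    """Train on db, logging a windowed mean loss and periodic validation accuracy."""
    loss_meter = tf.keras.metrics.Mean()     # accumulates a total and a count of losses
    acc_meter = tf.keras.metrics.Accuracy()  # accumulates matches between labels and predictions
    history = []
    for step, (x, y) in enumerate(db):
        x = tf.reshape(x, (-1, 28 * 28))      # flatten 28x28 images
        with tf.GradientTape() as tape:
            logits = network(x)
            loss = tf.reduce_mean(
                tf.nn.sparse_softmax_cross_entropy_with_logits(labels=y, logits=logits))
        loss_meter.update_state(loss)
        grads = tape.gradient(loss, network.trainable_variables)
        optimizer.apply_gradients(zip(grads, network.trainable_variables))

        if step % log_every == 0:
            # Mean of the losses recorded since the previous reset, next to this batch's loss
            print(step, 'loss_meter:', float(loss_meter.result()), 'loss:', float(loss))
            loss_meter.reset_state()

        if step % eval_every == 0:
            acc_meter.reset_state()
            total, total_correct = 0, 0
            for xv, yv in ds_val:
                logits_v = network(tf.reshape(xv, (-1, 28 * 28)))
                pred = tf.argmax(logits_v, axis=1, output_type=tf.int32)
                # Manual count, kept alongside the metric for comparison
                total_correct += int(tf.reduce_sum(tf.cast(tf.equal(pred, yv), tf.int32)))
                total += int(xv.shape[0])
                acc_meter.update_state(yv, pred)
            manual_acc, meter_acc = total_correct / total, float(acc_meter.result())
            print(step, 'Evaluate Acc:', manual_acc, meter_acc)
            history.append((step, manual_acc, meter_acc))
    return history
```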

```
0 loss_meter: 0.0147538865 loss: tf.Tensor(0.0147538865, shape=(), dtype=float32)
78 Evaluate Acc: 0.9743 0.9743
100 loss_meter: 0.04805827 loss: tf.Tensor(0.041749448, shape=(), dtype=float32)
200 loss_meter: 0.053051163 loss: tf.Tensor(0.0131300185, shape=(), dtype=float32)
300 loss_meter: 0.07365899 loss: tf.Tensor(0.0278976, shape=(), dtype=float32)
400 loss_meter: 0.07007911 loss: tf.Tensor(0.05961611, shape=(), dtype=float32)
500 loss_meter: 0.059413455 loss: tf.Tensor(0.04578472, shape=(), dtype=float32)
78 Evaluate Acc: 0.976 0.976
600 loss_meter: 0.045514174 loss: tf.Tensor(0.1006662, shape=(), dtype=float32)
700 loss_meter: 0.05224053 loss: tf.Tensor(0.061094068, shape=(), dtype=float32)
800 loss_meter: 0.06696898 loss: tf.Tensor(0.08473669, shape=(), dtype=float32)
900 loss_meter: 0.06490257 loss: tf.Tensor(0.05812662, shape=(), dtype=float32)
1000 loss_meter: 0.056545332 loss: tf.Tensor(0.10347018, shape=(), dtype=float32)
78 Evaluate Acc: 0.9745 0.9745
1100 loss_meter: 0.0515905 loss: tf.Tensor(0.045466803, shape=(), dtype=float32)
1200 loss_meter: 0.06225908 loss: tf.Tensor(0.046285823, shape=(), dtype=float32)
1300 loss_meter: 0.057779107 loss: tf.Tensor(0.05366286, shape=(), dtype=float32)
1400 loss_meter: 0.06661249 loss: tf.Tensor(0.10940219, shape=(), dtype=float32)
1500 loss_meter: 0.059498344 loss: tf.Tensor(0.05784896, shape=(), dtype=float32)
78 Evaluate Acc: 0.974 0.974
1600 loss_meter: 0.06252271 loss: tf.Tensor(0.0287047, shape=(), dtype=float32)
1700 loss_meter: 0.060016934 loss: tf.Tensor(0.006867729, shape=(), dtype=float32)
1800 loss_meter: 0.05751593 loss: tf.Tensor(0.13693535, shape=(), dtype=float32)
```
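Two patterns in the log follow from how these metrics accumulate state. At step 0 `loss_meter` equals the batch loss because only one value has been recorded since the reset; at later steps it is the mean over the preceding window of steps. On the `Evaluate Acc` lines the two numbers agree because `Accuracy` sums matches and sample counts across all batches, which is exactly `total_correct / total`. (The leading 78 is plausibly the index of the last validation batch, since 79 batches of 128 cover 10,000 test images; this is an inference, not stated in the post.) The plain-Python classes below are hypothetical stand-ins that mimic that bookkeeping, not the TensorFlow implementation:

```python
class MeanMeter:
    """Running mean of scalar values, mirroring what tf.keras.metrics.Mean tracks."""

    def __init__(self):
        self.reset_state()

    def update_state(self, value):
        self.total += value
        self.count += 1

    def result(self):
        # Like Mean, report 0.0 instead of dividing by zero when nothing was recorded
        return self.total / self.count if self.count else 0.0

    def reset_state(self):
        self.total, self.count = 0.0, 0


class AccuracyMeter:
    """Fraction of exact matches accumulated over batches, like tf.keras.metrics.Accuracy."""

    def __init__(self):
        self.reset_state()

    def update_state(self, y_true, y_pred):
        self.correct += sum(1 for t, p in zip(y_true, y_pred) if t == p)
        self.count += len(y_true)

    def result(self):
        return self.correct / self.count if self.count else 0.0

    def reset_state(self):
        self.correct, self.count = 0, 0


# Log every 2 steps, as the post logs every 100
meter = MeanMeter()
for step, loss in enumerate([0.4, 0.2, 0.3, 0.1]):
    meter.update_state(loss)
    if step % 2 == 0:
        print(step, 'loss_meter:', meter.result(), 'loss:', loss)
        meter.reset_state()
# prints: 0 loss_meter: 0.4 loss: 0.4
#         2 loss_meter: 0.25 loss: 0.3
```

Because `AccuracyMeter` weights every sample equally, a smaller final batch does not skew the result the way averaging per-batch accuracies would.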


Unless otherwise stated, all posts on this blog are licensed under CC BY-NC-SA 4.0. Please credit 没有胡子的猫Asimok when reposting.
