tag-based-multi-span-extraction


Code: https://github.com/eladsegal/tag-based-multi-span-extraction

Paper: A Simple and Effective Model for Answering Multi-span Questions

- Configure the environment to use a proxy

scp -r zhaoxiaofeng@219.216.64.175:~/.proxychains ./

Edit ~/.bashrc and append a command alias at the end:

alias proxy=/data0/zhaoxiaofeng/usr/bin/proxychains4  # on servers 77, 175 and 206, add only this line

- Download the code:

git clone https://github.com/eladsegal/tag-based-multi-span-extraction

- Set up the environment:

proxy conda create -n allennlp python=3.6.9

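Training the model

Training can be run in the background with nohup and &.

tail -f nohup.out (or whichever file the output was redirected to) follows the log in real time.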

nohup command >> nohup.out 2>&1 &

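- 2>&1 redirects standard error (2) to wherever standard output (1) is going, so both streams end up in the same log file; >> appends to that file instead of overwriting it.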
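For reference, the same pattern can be reproduced from Python. This is a minimal sketch that assumes a POSIX server; `launch_in_background` is a hypothetical helper, not part of the repository. It opens the log in append mode (like `>>`), merges stderr into stdout (like `2>&1`), and starts the child in a new session so it is detached from the terminal (roughly what `nohup ... &` achieves).

```python
import subprocess


def launch_in_background(cmd, log_path):
    """Start `cmd` without waiting for it, appending stdout and stderr to `log_path`.

    Roughly mirrors `nohup cmd >> log_path 2>&1 &`:
    - the log is opened in append mode ("ab"), like >>
    - stderr=subprocess.STDOUT merges stderr into stdout, like 2>&1
    - start_new_session=True gives the child its own session with no
      controlling terminal, so a terminal hangup does not reach it (nohup's job)
    """
    log = open(log_path, "ab")
    try:
        return subprocess.Popen(
            cmd,
            stdout=log,
            stderr=subprocess.STDOUT,
            stdin=subprocess.DEVNULL,
            start_new_session=True,
        )
    finally:
        # The child holds its own copy of the descriptor, so the parent can close it.
        log.close()
```

A possible call for the RoBERTa run below would be `launch_in_background(["allennlp", "train", "configs/drop/roberta/drop_roberta_large_TASE_IO_SSE.jsonnet", "-s", "training_directory_base", "-f", "--include-package", "src"], "base_log.out")`, after which `tail -f base_log.out` works as before.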

RoBERTa TASE_IO + SSE

allennlp train configs/drop/roberta/drop_roberta_large_TASE_IO_SSE.jsonnet -s training_directory -f --include-package src

Running on the server:

nohup allennlp train configs/drop/roberta/drop_roberta_large_TASE_IO_SSE.jsonnet -s training_directory_base -f --include-package src >> base_log.out 2>&1 &

Or:

allennlp train download_data/config.json -s training_directory --include-package src

Bert_large TASE_BIO + SSE

-f: deletes the existing serialization directory (the one given with -s) and trains again from scratch

-r: resumes training from the state saved in the serialization directory

allennlp train configs/drop/bert/drop_bert_large_TASE_BIO_SSE.jsonnet -s training_directory_bert -f --include-package src

Running on the server:

nohup allennlp train configs/drop/bert/drop_bert_large_TASE_BIO_SSE.jsonnet -s training_directory_bert -f --include-package src >> bertlog.out 2>&1 &
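The -f / -r logic above can be sketched as a small command builder. `build_train_command` is a hypothetical helper that only assembles arguments already used in these notes. It refuses to combine -f with -r, since forcing would delete the checkpoint that recovering needs. `shlex.quote` is used instead of `shlex.join` because the environment runs Python 3.6 and `shlex.join` only exists from Python 3.8.

```python
import shlex


def build_train_command(config, serialization_dir, force=False, recover=False,
                        include_package="src"):
    """Assemble an `allennlp train` command as a list of arguments.

    force   -> -f: wipe the serialization directory and start over
    recover -> -r: resume from the state saved in the serialization directory
    """
    if force and recover:
        raise ValueError("use either force (-f) or recover (-r), not both")
    cmd = ["allennlp", "train", config, "-s", serialization_dir]
    if force:
        cmd.append("-f")
    if recover:
        cmd.append("-r")
    cmd += ["--include-package", include_package]
    return cmd


# Example: resume the BERT run instead of restarting it.
cmd = build_train_command(
    "configs/drop/bert/drop_bert_large_TASE_BIO_SSE.jsonnet",
    "training_directory_bert",
    recover=True,
)
print(" ".join(shlex.quote(part) for part in cmd))
```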

Predicting with the model

--cuda-device accepts only one GPU.

Details are given below.

Evaluating the model

Details are given below.

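Pretrained models: replacing remote downloads with local files

Tip for locating the relevant files (list every file under a path that contains a given string):

grep -ril "<string>" <path>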
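When grep is inconvenient, for example from inside a Python session, a rough equivalent of `grep -ril` is sketched below. `files_containing` is a hypothetical helper. Searching the transformers package for the S3 host that appears in the download URLs is an assumption about which string to look for.

```python
import os


def files_containing(root, needle):
    """Return sorted paths under `root` whose contents contain `needle` (case-insensitive).

    A pure-Python stand-in for `grep -ril needle root`; files that cannot be
    opened are skipped, and undecodable bytes are ignored.
    """
    needle = needle.lower()
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, encoding="utf-8", errors="ignore") as f:
                    if needle in f.read().lower():
                        hits.append(path)
            except OSError:
                continue
    return sorted(hits)
```

A possible call: `files_containing("/data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers", "s3.amazonaws.com")`.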

Downloading a file:

proxy wget https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-vocab.txt

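Tip for downloading files quickly:

The proxy alias cannot be run from Python: the call fails with

sh: 1: proxy: not found

because aliases defined in ~/.bashrc are not loaded in the non-interactive sh that Python spawns. The downloads therefore have to be started manually, so the script below only generates and prints the commands. Copy the printed output and run it in a shell to download everything in one batch.

import os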

Output:

proxy wget https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-config.json
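Only the first line of the script and of its output survive above. A minimal sketch of the same idea follows: it prints one `proxy wget` command per URL, ready to copy into a shell. The URL list is hypothetical beyond the two URLs that appear in these notes, and `download_commands` is a hypothetical helper.

```python
# Hypothetical list: only these two URLs appear in the notes; add the rest as needed.
URLS = [
    "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-vocab.txt",
    "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-config.json",
]


def download_commands(urls, prefix="proxy wget"):
    """Return one shell command per URL, to be copied into a shell and run there."""
    return ["{} {}".format(prefix, url) for url in urls]


for line in download_commands(URLS):
    print(line)
```

Passing `prefix="/data0/zhaoxiaofeng/usr/bin/proxychains4 wget"` should sidestep the alias problem, because a full path does not depend on ~/.bashrc. This is untested here.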

Files involved:

/data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers/tokenization_roberta.py

- Path on each server

| Server | Path |
|---|---|
| 202.199.6.77 | /data0/maqi |
| 219.216.64.206 | /data0/maqi |
| 219.216.64.175 | |
| 219.216.64.154 | |

Copy a modified file to another server, for example:

scp /data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers/tokenization_roberta.py maqi@202.199.6.77:/data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers/tokenization_roberta.py

- tokenization_roberta.py

vim /data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers/tokenization_roberta.py

Original:

PRETRAINED_VOCAB_FILES_MAP = {

Replaced with:

PRETRAINED_VOCAB_FILES_MAP = {

- modeling_roberta.py

vim /data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers/modeling_roberta.py

Original:

ROBERTA_PRETRAINED_MODEL_ARCHIVE_MAP = {

Replaced with:

ROBERTA_PRETRAINED_MODEL_ARCHIVE_MAP = {

- configuration_roberta.py

vim /data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers/configuration_roberta.py

Original:

ROBERTA_PRETRAINED_CONFIG_ARCHIVE_MAP = {

Replaced with:

ROBERTA_PRETRAINED_CONFIG_ARCHIVE_MAP = {

- tokenization_bert.py

Original:

PRETRAINED_VOCAB_FILES_MAP = {

Replaced with:

Local file path: /data0/maqi/pretrained_model/tokenization_bert

PRETRAINED_VOCAB_FILES_MAP = {

- configuration_bert.py

vim /data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers/configuration_bert.py

Original:

BERT_PRETRAINED_CONFIG_ARCHIVE_MAP = {

Replaced with:

Local files: /data0/maqi/pretrained_model/configuration_bert

BERT_PRETRAINED_CONFIG_ARCHIVE_MAP = {

- modeling_bert.py

vim /data0/maqi/.conda/envs/allennlp/lib/python3.6/site-packages/transformers/modeling_bert.py

Original:

BERT_PRETRAINED_MODEL_ARCHIVE_MAP = {

Replaced with:

Local path: /data0/maqi/pretrained_model/modeling_bert

BERT_PRETRAINED_MODEL_ARCHIVE_MAP = {
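All of the edits above follow one pattern: each remote URL in a shortcut-name-to-URL map is swapped for a local file. Below is a minimal sketch that generates such a replacement map. It assumes the files were downloaded with wget, which keeps the URL's basename, into the local directories listed above, and that this version of transformers accepts a local file path wherever the map held a URL. `localize` is a hypothetical helper, and the example map contains only the one BERT vocabulary URL that appears in these notes.

```python
import json
import os


def localize(url_map, local_dir):
    """Return a copy of `url_map` with every URL replaced by local_dir/<URL basename>.

    Nested dicts (such as a {"vocab_file": {...}} layout) are handled recursively;
    flat {name: url} archive maps work the same way.
    """
    local = {}
    for key, value in url_map.items():
        if isinstance(value, dict):
            local[key] = localize(value, local_dir)
        else:
            local[key] = os.path.join(local_dir, value.rsplit("/", 1)[-1])
    return local


# Example built from the BERT vocabulary URL used earlier in these notes.
remote = {
    "vocab_file": {
        "bert-base-uncased": "https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-vocab.txt",
    }
}
print(json.dumps(localize(remote, "/data0/maqi/pretrained_model/tokenization_bert"), indent=4))
```

The printed dictionary can be pasted in place of the original map. This avoids editing each URL by hand, at the cost of relying on the downloaded filenames matching the URLs.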

Execution flow

The walkthrough below is based on tag-based-multi-span-extraction/configs/drop/roberta/drop_roberta_large_TASE_IO_SSE.jsonnet.

dataset_reader

"is_training": true puts the reader in training mode.

"dataset_reader": {

- model

"model": {

- Datasets

"train_data_path": "drop_data/drop_dataset_train.json",

trainer

"cuda_device": -1 means training runs on the CPU.

"trainer": {

validation_dataset_reader

"is_training": false puts the reader in evaluation mode.

"validation_dataset_reader": {
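For a one-off change such as the device, an alternative to editing the jsonnet file is the JSON overrides string that allennlp train accepts via -o/--overrides. The sketch below builds such a command. The nested dictionary mirrors the config's structure, and setting trainer.cuda_device to 0 is only an example. Whether the installed allennlp version supports -o should be confirmed with `allennlp train --help`.

```python
import json
import shlex

# Nested overrides mirror the config layout; only keys discussed above are used.
overrides = {"trainer": {"cuda_device": 0}}

cmd = [
    "allennlp", "train",
    "configs/drop/roberta/drop_roberta_large_TASE_IO_SSE.jsonnet",
    "-s", "training_directory",
    "-f",
    "--include-package", "src",
    "-o", json.dumps(overrides),
]
# shlex.quote wraps the JSON in single quotes so the shell passes it through intact.
print(" ".join(shlex.quote(part) for part in cmd))
```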

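Prediction

Training packages the trained model as model.tar.gz inside the serialization directory.

allennlp predict training_directory/model.tar.gz drop_data/drop_dataset_dev.json --predictor machine-comprehension --cuda-device 0 --output-file predictions.jsonl --use-dataset-reader --include-package src

The predictions are written to predictions.jsonl in the repository root (the directory the command is run from).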
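predictions.jsonl uses the JSON Lines format, with one JSON object per line. The fields in each object depend on the predictor and are not documented in these notes, so the sketch below only loads the file and counts the top-level keys to reveal its schema. `load_jsonl` and `key_summary` are hypothetical helpers.

```python
import json
from collections import Counter


def load_jsonl(path):
    """Read a JSON Lines file: one JSON object per non-empty line."""
    records = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                records.append(json.loads(line))
    return records


def key_summary(records):
    """Count how often each top-level key occurs across all records."""
    return Counter(key for record in records for key in record)
```

For example, `key_summary(load_jsonl("predictions.jsonl")).most_common()` lists the fields the predictor actually wrote.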

Evaluation

DROP

allennlp evaluate training_directory/model.tar.gz drop_data/drop_dataset_dev.json --cuda-device 3 --output-file eval.json --include-package src

BERT

allennlp evaluate training_directory_bert/model.tar.gz drop_data/drop_dataset_dev.json --cuda-device 1 --output-file eval_bert.json --include-package src

The evaluation metrics are written to eval.json (eval_bert.json for the BERT model) in the repository root.

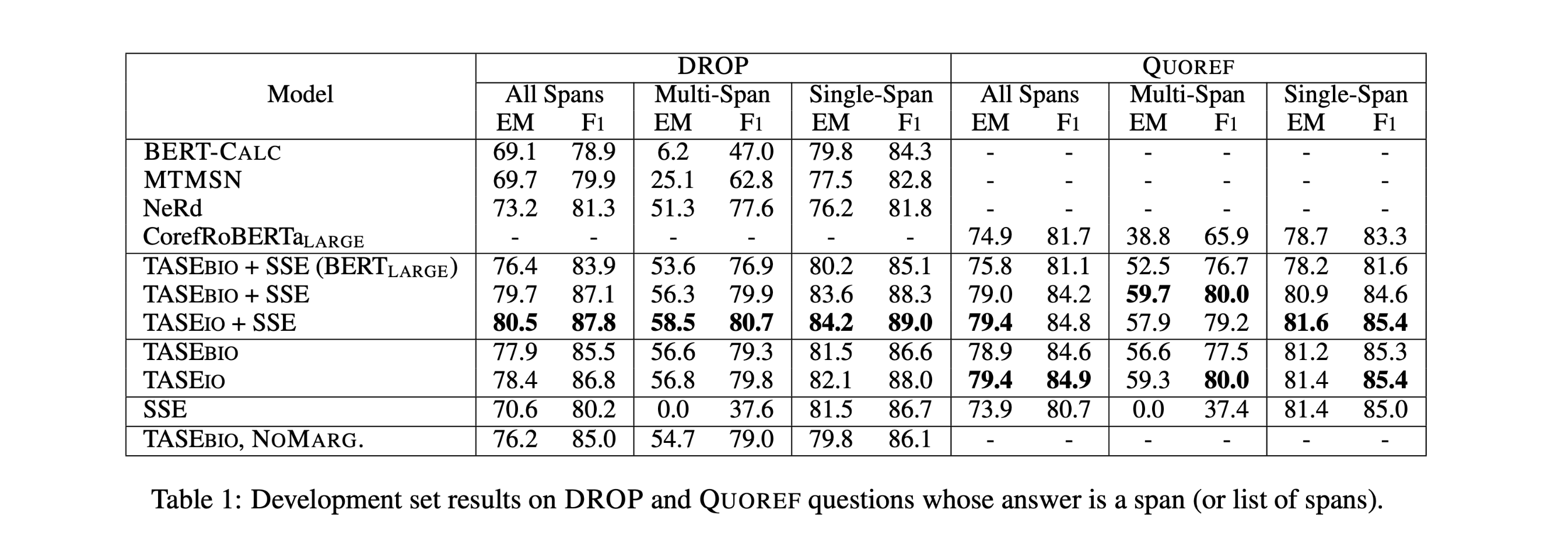
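A sketch like the following can turn eval.json into a row of the tables below. It assumes eval.json is a flat JSON object whose keys match the tables' column names and whose values are fractions between 0 and 1; if the values are already percentages, drop the factor of 100. `load_metrics` and `metrics_row` are hypothetical helpers.

```python
import json

# Column names copied from the result tables; assumed to match the keys in eval.json.
COLUMNS = ["em_all_spans", "f1_all_spans", "em_multi_span",
           "f1_multi_span", "em_span", "f1_span"]


def load_metrics(path="eval.json"):
    """Load the metrics dictionary written by `allennlp evaluate --output-file`."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def metrics_row(metrics, columns=COLUMNS):
    """Format the selected metrics as a table row, in percent with one decimal.

    Missing metrics are shown as "-".
    """
    cells = []
    for name in columns:
        value = metrics.get(name)
        cells.append("-" if value is None else "{:.1f}".format(100 * value))
    return "| " + " | ".join(cells) + " |"
```

For example, `print(metrics_row(load_metrics("eval_bert.json")))` prints a row in the same column order as the tables.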

Evaluation results: DROP

TASE_IO+SSE

| em_all_spans | f1_all_spans | em_multi_span | f1_multi_span | em_span | f1_span |
|---|---|---|---|---|---|
| 80.6 | 87.8 | 60.8 | 82.6 | 84.2 | 89.0 |

TASE_IO+SSE(BLOCK)

| em_all_spans | f1_all_spans | em_multi_span | f1_multi_span | em_span | f1_span |
|---|---|---|---|---|---|
| 55.3 | 62.8 | 0 | 0 | 56.5 | 64.2 |

TASE_IO+SSE(BERT_large)

| em_all_spans | f1_all_spans | em_multi_span | f1_multi_span | em_span | f1_span |
|---|---|---|---|---|---|
| 76.4 | 83.9 | 54.5 | 80.1 | 80.7 | 85.2 |

TASE_IO+SSE (IO tagging applied only to sentences that contain the answer)

| em_all_spans | f1_all_spans | em_multi_span | f1_multi_span | em_span | f1_span |
|---|---|---|---|---|---|
| 57.8 | 64.5 | 16.7 | 23.3 | 58.1 | 64.2 |

Results reported in the paper: