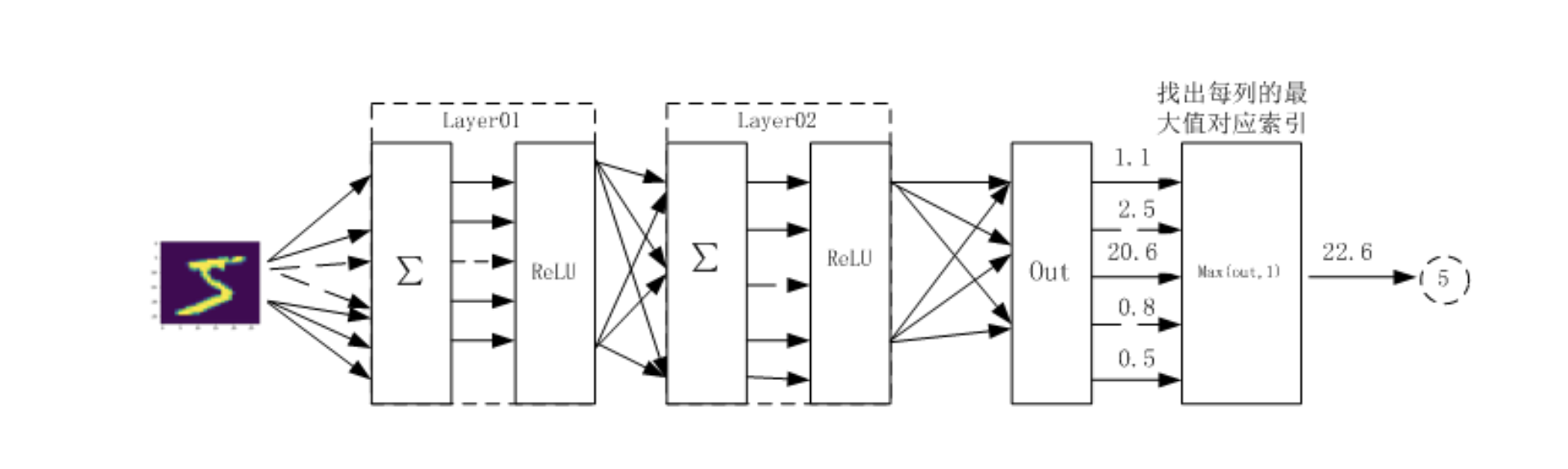

利用神经网络完成对手写数字进行识别

两个隐藏层

1. 准备数据 1 2 3 4 5 6 7 8 9 10 11 12 13 import osimport numpy as npimport matplotlib.pyplot as pltimport torchfrom torchvision.datasets import mnistimport torchvision.transforms as transformsfrom torch.utils.data import DataLoaderimport torch.nn.functional as Fimport torch.optim as optimfrom torch import nn

2. 定义一些超参数 1 2 3 4 5 6 7 train_batch_size = 64 test_batch_size = 128 learning_rate = 0.01 num_epoches = 20 lr = 0.01 momentum = 0.5

3. 下载数据并对数据进行预处理 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 transform = transforms.Compose( [transforms.ToTensor(), transforms.Normalize([0.5 ], [0.5 ])]) is_downloda = True if os.path.exists('./data/MNIST' ): is_downloda = False train_dataset = mnist.MNIST( './data' , train=True , transform=transform, download=is_downloda) test_dataset = mnist.MNIST('./data' , train=False , transform=transform) train_loader = DataLoader( train_dataset, batch_size=train_batch_size, shuffle=True ) test_loader = DataLoader( test_dataset, batch_size=test_batch_size, shuffle=False )

Normalize([0.5], [0.5])对张量进行归一化,这里两个0.5分别表示对张量进行归一化的全局平均值和方差。因图像是灰色的只有一个通道,如果有多个通道,需要有多个数字,如三个通道,应该是Normalize([m1,m2,m3], [n1,n2,n3])

用DataLoader得到生成器,可节省内存。

4. 可视化源数据 1 2 3 4 5 6 7 8 9 10 11 12 13 14 examples = enumerate (test_loader) batch_idx, (example_data, example_targets) = next (examples) fig = plt.figure() for i in range (6 ): plt.subplot(2 , 3 , i+1 ) plt.tight_layout() plt.imshow(example_data[i][0 ], cmap='gray' , interpolation='none' ) plt.title("Ground Truth: {}" .format (example_targets[i])) plt.xticks([]) plt.yticks([])

5. 构建模型 5.1 构建网络 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 class Net (nn.Module ): """ 使用sequential构建网络,Sequential()函数的功能是将网络的层组合到一起 """ def __init__ (self, in_dim, n_hidden_1, n_hidden_2, out_dim ): super (Net, self).__init__() self.layer1 = nn.Sequential( nn.Linear(in_dim, n_hidden_1), nn.BatchNorm1d(n_hidden_1)) self.layer2 = nn.Sequential( nn.Linear(n_hidden_1, n_hidden_2), nn.BatchNorm1d(n_hidden_2)) self.layer3 = nn.Sequential(nn.Linear(n_hidden_2, out_dim)) def forward (self, x ): x = F.relu(self.layer1(x)) x = F.relu(self.layer2(x)) x = self.layer3(x) return x

5.2 实例化网络 1 2 3 4 5 6 7 8 9 10 11 print ("GPU是否可用" , torch.cuda.is_available())device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu" ) model = Net(28 * 28 , 300 , 100 , 10 ) model.to(device) criterion = nn.CrossEntropyLoss() optimizer = optim.SGD(model.parameters(), lr=lr, momentum=momentum)

GPU是否可用 False

6. 训练模型 6.1 训练模型 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 losses = [] acces = [] eval_losses = [] eval_acces = [] for epoch in range (num_epoches): train_loss = 0 train_acc = 0 model.train() if epoch % 5 == 0 : optimizer.param_groups[0 ]['lr' ] *= 0.1 for img, label in train_loader: img = img.to(device) label = label.to(device) img = img.view(img.size(0 ), -1 ) out = model(img) loss = criterion(out, label) optimizer.zero_grad() loss.backward() optimizer.step() train_loss += loss.item() _, pred = out.max (1 ) num_correct = (pred == label).sum ().item() acc = num_correct / img.shape[0 ] train_acc += acc losses.append(train_loss / len (train_loader)) acces.append(train_acc / len (train_loader)) eval_loss = 0 eval_acc = 0 model.eval () for img, label in test_loader: img = img.to(device) label = label.to(device) img = img.view(img.size(0 ), -1 ) out = model(img) loss = criterion(out, label) eval_loss += loss.item() _, pred = out.max (1 ) num_correct = (pred == label).sum ().item() acc = num_correct / img.shape[0 ] eval_acc += acc eval_losses.append(eval_loss / len (test_loader)) eval_acces.append(eval_acc / len (test_loader)) print ('epoch: {}, Train Loss: {:.4f}, Train Acc: {:.4f}, Test Loss: {:.4f}, Test Acc: {:.4f}' .format (epoch, train_loss / len (train_loader), train_acc / len (train_loader), eval_loss / len (test_loader), eval_acc / len (test_loader)))

epoch: 0, Train Loss: 1.0274, Train Acc: 0.7921, Test Loss: 0.5553, Test Acc: 0.9008

epoch: 1, Train Loss: 0.4833, Train Acc: 0.8995, Test Loss: 0.3530, Test Acc: 0.9259

epoch: 2, Train Loss: 0.3504, Train Acc: 0.9187, Test Loss: 0.2769, Test Acc: 0.9384

epoch: 3, Train Loss: 0.2838, Train Acc: 0.9328, Test Loss: 0.2301, Test Acc: 0.9483

epoch: 4, Train Loss: 0.2431, Train Acc: 0.9408, Test Loss: 0.1980, Test Acc: 0.9530

epoch: 5, Train Loss: 0.2202, Train Acc: 0.9463, Test Loss: 0.1964, Test Acc: 0.9534

epoch: 6, Train Loss: 0.2188, Train Acc: 0.9464, Test Loss: 0.1913, Test Acc: 0.9537

epoch: 7, Train Loss: 0.2159, Train Acc: 0.9471, Test Loss: 0.1882, Test Acc: 0.9551

epoch: 8, Train Loss: 0.2131, Train Acc: 0.9487, Test Loss: 0.1869, Test Acc: 0.9540

epoch: 9, Train Loss: 0.2107, Train Acc: 0.9490, Test Loss: 0.1835, Test Acc: 0.9559

epoch: 10, Train Loss: 0.2081, Train Acc: 0.9500, Test Loss: 0.1846, Test Acc: 0.9552

epoch: 11, Train Loss: 0.2090, Train Acc: 0.9492, Test Loss: 0.1855, Test Acc: 0.9558

epoch: 12, Train Loss: 0.2091, Train Acc: 0.9483, Test Loss: 0.1841, Test Acc: 0.9558

epoch: 13, Train Loss: 0.2076, Train Acc: 0.9493, Test Loss: 0.1871, Test Acc: 0.9544

epoch: 14, Train Loss: 0.2066, Train Acc: 0.9491, Test Loss: 0.1833, Test Acc: 0.9562

epoch: 15, Train Loss: 0.2086, Train Acc: 0.9491, Test Loss: 0.1837, Test Acc: 0.9560

epoch: 16, Train Loss: 0.2074, Train Acc: 0.9496, Test Loss: 0.1827, Test Acc: 0.9559

epoch: 17, Train Loss: 0.2076, Train Acc: 0.9488, Test Loss: 0.1835, Test Acc: 0.9559

epoch: 18, Train Loss: 0.2076, Train Acc: 0.9494, Test Loss: 0.1845, Test Acc: 0.9558

epoch: 19, Train Loss: 0.2074, Train Acc: 0.9497, Test Loss: 0.1844, Test Acc: 0.9551

6.2 可视化训练及测试损失值 1 2 3 4 5 6 7 8 9 10 plt.title('train loss' ) plt.subplot(1 ,2 ,1 ) plt.plot(np.arange(len (losses)), losses) plt.legend(['Train Loss' ], loc='upper right' ) plt.subplot(1 ,2 ,2 ) plt.title('train acces' ) plt.plot(np.arange(len (acces)),acces,color='red' , label='acces' ) plt.legend(['Train acces' ], loc='upper right' )