Implementing Machine Learning with Numpy


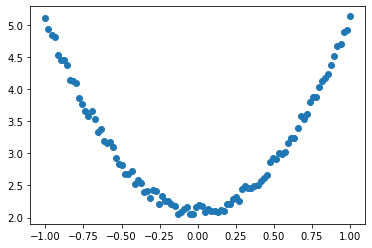

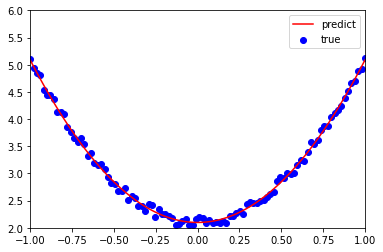

Target expression: $y=3x^2+2$

Model: $y=wx^2+b$

Loss function: $Loss=\frac{1}{2}\sum_{i=1}^{100}(wx^2_i+b-y_i)^2$

Partial derivatives of the loss:

$\frac{\partial Loss}{\partial w}=\sum_{i=1}^{100}(wx^2_i+b-y_i)x^2_i$

$\frac{\partial Loss}{\partial b}=\sum_{i=1}^{100}(wx^2_i+b-y_i)$
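The chain-rule step behind these gradients is worked out below for a single term of the sum. The factor 2 from the square cancels the $\frac{1}{2}$. The inner derivative is $x^2_i$ with respect to $w$ and $1$ with respect to $b$:

$$
\frac{\partial}{\partial w}\,\frac{1}{2}(wx^2_i+b-y_i)^2=(wx^2_i+b-y_i)\cdot\frac{\partial (wx^2_i+b-y_i)}{\partial w}=(wx^2_i+b-y_i)\,x^2_i
$$

$$
\frac{\partial}{\partial b}\,\frac{1}{2}(wx^2_i+b-y_i)^2=(wx^2_i+b-y_i)\cdot\frac{\partial (wx^2_i+b-y_i)}{\partial b}=(wx^2_i+b-y_i)
$$

Summing each line over $i=1,\dots,100$ gives $\frac{\partial Loss}{\partial w}$ and $\frac{\partial Loss}{\partial b}$. The residual appears to the first power, not squared.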

The parameters are learned by gradient descent with learning rate $lr$:

$w \leftarrow w - lr\cdot\frac{\partial Loss}{\partial w}$

$b \leftarrow b - lr\cdot\frac{\partial Loss}{\partial b}$
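A finite-difference check can confirm that the analytic gradients are correct before they are used in the update rule. This is a standard technique and is not part of the original post: it compares the gradient sums with central differences of the loss. The noise-free data, the test point $(w, b) = (0.5, 0.1)$, and the helper names `loss` and `grads` are assumptions chosen only for illustration.

```python
import numpy as np

# Hypothetical noise-free data for the check: 100 points on y = 3x^2 + 2
x = np.linspace(-1, 1, 100).reshape(100, 1)
y = 3 * x**2 + 2


def loss(w, b):
    """Loss = 1/2 * sum((w*x^2 + b - y)^2)."""
    return 0.5 * np.sum((w * x**2 + b - y) ** 2)


def grads(w, b):
    """Analytic gradients: sum(r * x^2) and sum(r), where r is the residual."""
    r = w * x**2 + b - y  # residuals, shape (100, 1)
    return np.sum(r * x**2), np.sum(r)


# Central finite differences at an arbitrary test point
w0, b0, eps = 0.5, 0.1, 1e-6
num_gw = (loss(w0 + eps, b0) - loss(w0 - eps, b0)) / (2 * eps)
num_gb = (loss(w0, b0 + eps) - loss(w0, b0 - eps)) / (2 * eps)
gw, gb = grads(w0, b0)
print(gw, num_gw)
print(gb, num_gb)
```

The loss is quadratic in $w$ and $b$, so central differences agree with the exact gradient up to floating-point rounding.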

```python
import numpy as np
import matplotlib.pyplot as plt
```

1. Generate the training data

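The code for this step did not survive in the post. The sketch below generates 100 noisy samples of $y=3x^2+2$. The value range $[-1, 1]$, the uniform noise in $[0, 0.2)$, and the seed are all assumptions, not the author's confirmed settings.

```python
import numpy as np

# Assumptions: 100 evenly spaced x in [-1, 1], uniform noise in [0, 0.2), seed 100
np.random.seed(100)
x = np.linspace(-1, 1, 100).reshape(100, 1)           # column vector, shape (100, 1)
noise = 0.2 * np.random.rand(x.size).reshape(100, 1)  # hypothetical noise level
y = 3 * np.power(x, 2) + 2 + noise                     # y = 3x^2 + 2 plus noise
print(x.shape, y.shape)
```

Column vectors of shape `(100, 1)` keep later broadcasting against `(1, 1)` parameters straightforward.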

2. Inspect the distribution of x and y

```python
plt.scatter(x, y)
plt.show()
```

3. Initialize the weight parameters

```python
# Randomly initialize the parameters
```
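Only the comment of the initialization code remains. The sketch below draws $w$ and $b$ uniformly from $[0, 1)$. The names `w1` and `b1` and the `(1, 1)` shape are assumptions; the shape matches the `[[...]]` format of the result printed at the end of the post.

```python
import numpy as np

# Hypothetical names w1, b1; shape (1, 1) mirrors the [[...]] printed result
np.random.seed(0)
w1 = np.random.rand(1, 1)  # uniform in [0, 1)
b1 = np.random.rand(1, 1)  # uniform in [0, 1)
print(w1, b1)
```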

4. Train the model

```python
lr = 0.001  # learning rate
```
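The training code is truncated after the learning rate. Below is a minimal sketch of a full gradient-descent loop using the loss, gradients, and update rule given earlier. `x`, `y`, `lr`, and `y_pred` appear in the post. The data generation, the 800-iteration count, and the names `w1`, `b1`, `grad_w`, `grad_b` are assumptions.

```python
import numpy as np

# Hypothetical setup: same assumed data and initialization as the earlier sketches
np.random.seed(100)
x = np.linspace(-1, 1, 100).reshape(100, 1)
y = 3 * np.power(x, 2) + 2 + 0.2 * np.random.rand(100, 1)

w1 = np.random.rand(1, 1)
b1 = np.random.rand(1, 1)
lr = 0.001  # learning rate from the post

for step in range(800):  # iteration count is an assumption
    # Forward pass: y_pred = w * x^2 + b, shape (100, 1)
    y_pred = np.power(x, 2) * w1 + b1
    # Loss = 1/2 * sum of squared residuals
    loss = 0.5 * np.sum((y_pred - y) ** 2)
    # Gradients: sum(r * x^2) and sum(r), where r = y_pred - y
    grad_w = np.sum((y_pred - y) * np.power(x, 2))
    grad_b = np.sum(y_pred - y)
    # Gradient-descent update
    w1 -= lr * grad_w
    b1 -= lr * grad_b

print(w1, b1, loss)
```

With 100 samples the gradients are sums rather than means, so `lr = 0.001` is small enough for the updates to stay stable on this data. `y_pred` from the last iteration is what the plotting step draws.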

5. Visualize the results

```python
plt.plot(x, y_pred, 'r-', label='predict')
```

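The learned parameters $w$ and $b$ printed after training are close to the target values $w=3$, $b=2$:

```
[[2.98927619]] [[2.09818307]]
```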
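The loss is the ordinary least-squares objective for the features $x^2$ and $1$. Gradient descent should therefore approach the closed-form solution that `np.linalg.lstsq` computes. The sketch below cross-checks under the same assumed data generation as the earlier sketches (seed 100, uniform noise in $[0, 0.2)$), so its numbers need not match the post exactly. `A`, `w_ls`, and `b_ls` are illustrative names.

```python
import numpy as np

# Same hypothetical data as the earlier sketches
np.random.seed(100)
x = np.linspace(-1, 1, 100).reshape(100, 1)
y = 3 * np.power(x, 2) + 2 + 0.2 * np.random.rand(100, 1)

# Design matrix with columns [x^2, 1], so A @ [[w], [b]] approximates y
A = np.hstack([np.power(x, 2), np.ones_like(x)])
coef, residuals, rank, sv = np.linalg.lstsq(A, y, rcond=None)
w_ls, b_ls = coef[0, 0], coef[1, 0]
print(w_ls, b_ls)
```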

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit 没有胡子的猫Asimok when reposting!
